Pyweek 25 Judges Investigation

Thank you for bearing with me while I investigated some reports of unjustified low scoring in Pyweek 25.

I received a number of complaints about low scores associated with comments including:

- I was completely confused by this entry (etc)

- Yuk

- Yeah.... no...

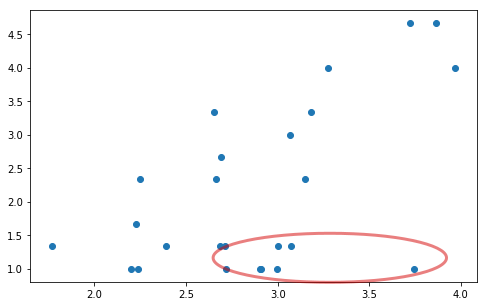

I downloaded the reviews from the Pyweek database and analysed them in a Jupyter notebook. One entrant stood out as responsible for many of the low ratings. However, this person was not universally biased. Here's a plot of their ratings (slightly randomised to preserve anonymity), with the overall rating of each game on the x axis and the user's rating on the y axis:

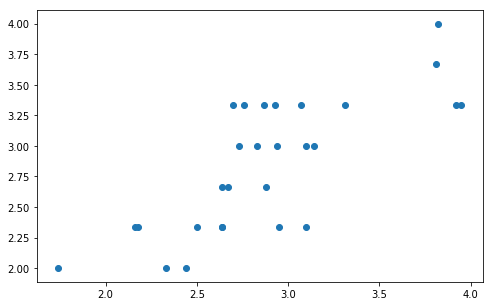

For an unbiased user this distribution should approximate a straight line. The low scores that I've circled appear to be unexpectedly low, although the majority of reviews do correlate well. For comparison, here is a similar plot of my own reviews:

I wanted to construct a more appropriate statistical test than "this looks low". I considered reviews that are more than 2 standard deviations below the mean score for each game. This finds low scores for games that are consistently rated highly, while allowing low scores for games that split the crowd. Under this test I find 14 low reviews.
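The test described above can be sketched roughly as follows. This is not the notebook code used in the investigation: the function name `flag_low_reviews`, the `(game, user, score)` tuple layout, the use of the population standard deviation, and the sample data are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean, pstdev


def flag_low_reviews(reviews, threshold=2.0):
    """Return (game, user, z) for reviews more than `threshold` standard
    deviations below the mean score of the game they rate.

    `reviews` is an iterable of (game, user, score) tuples (assumed layout).
    """
    reviews = list(reviews)

    # Group the scores by game so each game gets its own mean and spread.
    by_game = defaultdict(list)
    for game, _user, score in reviews:
        by_game[game].append(score)

    # Population standard deviation is an assumption; the original
    # analysis may have used the sample standard deviation instead.
    stats = {g: (mean(s), pstdev(s)) for g, s in by_game.items()}

    flagged = []
    for game, user, score in reviews:
        mu, sigma = stats[game]
        if sigma == 0:
            continue  # every reviewer agreed, so nothing can be an outlier
        z = (score - mu) / sigma
        if z < -threshold:
            flagged.append((game, user, z))
    return flagged


# Made-up data: one game that is consistently rated highly with a single
# low score, and one game that splits the crowd.
reviews = [("loved", f"u{i}", 4.0) for i in range(9)]
reviews.append(("loved", "u9", 1.0))
reviews += [("divisive", f"u{i}", 1.0 if i < 5 else 5.0) for i in range(10)]

flagged = flag_low_reviews(reviews)
for game, user, z in flagged:
    print(f"{game} {user} {z:.2f}")
```

With this made-up data only the single low score on the consistently well-rated game is flagged. The low scores on the divisive game sit just one standard deviation below its mean, so they pass.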

Some of these reviews are associated with longer or more specific comments (the number before each comment is how many standard deviations the score lies from the game's mean score):

- -2.18448239424969 Can't play on level 2. Screen blinking is too painful.

- -2.459674775249769 Unacceptable behavior by the participant, including the use of sexualized language or imagery and unwelcome sexual attention or advances. This breaks our community guidelines / rules.

- -2.2068053204820104 This game, even when working, its plainly average, average mechanics, average production, average enjoyment. Although the optimizations you pulled made the game silky smooth and I can give credit for that :)

- -2.2051543778147487 I was never a fan of tetris in the first place, but playing this makes me appreciate it. The decision to make the tiles different shapes was a bad one. It was confusing and i'm pretty sure there is no shape to fill in a hole on the very left side of the level. I really wish I could say something positive about this, but I couldn't really find anything that I liked

- -2.3148868868049037 I was completely confused by this entry, the obvious premade assets, the confusing dialog and backgroud, the fact that this pretty much relates nothing to the challenge, ill pass on this.

- -2.478902576381991 Collision detection broken, poor art, I dont even know whats going on. Does not seem to be related in the slightest.

The reviews in the other category are all very terse and weakly justified:

- -2.02409725481996 Yeah, i get the concept, the implementation is blahhh.

- -2.2387210960990034 Yeah.... no...

- -2.1522169248014134 Almost not playable

- -2.483773282901211 Not really much of a game

- -2.050609665440988 Ehhh... nothing special

- -2.085665361461421 Yuk

- -2.3435206489194926 what what

And this one would appear to indicate that the game did not work:

- -3.1427117740247756 please make a simple, easy way to run the game consistent with the readme.

All of the reviews in this latter category are associated with the entrant identified above. Removing some of these reviews (such as the latter category alone) could change the individual competition winner, but this is highly dependent on the criteria used to select which to remove.

Judgement

I believe that these unduly low ratings, specifically when accompanied by no real justification or constructive criticism, are not fair to the entrants who received them.

I believe the entrant who left them did not do so maliciously, as there is no clear pattern to them.

Vacating these specific ratings would change the result of the individual competition, narrowly putting Flip by Tee as the winning entry. Vacating all of the above low ratings, including the one gummbum complained about, would result in no change. No other overall positions would change under any scenario I considered.

There is a precedent for vacating some of the ratings, in that Richard has sometimes removed ratings in response to a challenge. However, the rules do not explain how such discretion should be applied. I am reluctant to strip winners of their title several days afterwards based on a subjective analysis.

I propose:

- To remove specifically these weakly justified, exceptionally low ratings, and adjust tallies etc.

- To appoint Flip as a joint winner of Pyweek 25, alongside The Desert And The Sea.

- To put in place clearer rules around challenges to ratings and the extent of judges' discretion in such matters.

- To change the rating system so that ratings are no longer left anonymously, in time for Pyweek 26.


Comments

In general, I'm not comfortable with changing the ratings after they were posted, regardless of how things would be moved around. The fact that I would benefit from it makes me even more uncomfortable. I feel that we would be violating some sort of integrity in the scores if we change that. People who are not following this discussion would be confused when they come back and see that the scores have changed, and may acquire a negative impression of Pyweek from that.

These sorts of things happen and they are not really a big deal, especially here in Pyweek where people are not super competitive and are just here to have fun. Like any sort of rating system, there will always be some random noise and we will never get a "perfect" estimate of the score (whatever "perfect" means). This noise can come from anywhere: for example, maybe someone was hungry when rating some of the games and not when rating others. It's difficult to eliminate this noise and I don't think we should make any efforts to do so after the fact. We can however try to make the scores more accurate before the competition runs, and we can continue to discuss non-anonymous ratings and related changes.

Tee: I agree we can't eliminate noise. The specific concern is not with making the ratings more accurate, but ensuring that entrants have a positive experience when the results are revealed. The dismissive comments coupled with low scores can be very disheartening to receive for people who've spent weeks investing in their entry and the competition. I know you didn't complain, but others did.

I don't buy that there's an issue of integrity in the scoring.

I knew they sucked but I never decided to change them. I wrote them while I was irritated and tired and did not do these games justice.

I would prefer a redesign of the judging system, but this has seemed to occur for the past few Pyweeks and the admins have seemed to be very out of it. Again, this website has never been brought out of the 2000s chaos era of the internet, and seriously needs to be redone both systematically and cosmetically. If the admins won't do this we are better off forking this competition into a new website (pyweek2.org???) and redoing the systems in place dramatically.

seriously needs to be redone both systematically and cosmetically. If the admins wont do this

https://github.com/pyweekorg/pyweekorg/

Just to clarify, I meant integrity as consistency. In general, in any sort of competition (not necessarily Pyweek), if the competition claims that someone is a winner, and then after a few days they backtrack and claim someone else is a winner, this signals to people that there is something wrong with the system. I'm not saying it must never be done; I'm saying that there is a cost to it and the trade-off must be taken into account. There are certain cases (such as cheating) in which I would say it must be done. However, this situation seems like a thin line to me and there are questions whose answers are unclear, at least to me. Is removing outliers really the right approach? How do we make sure that we do not remove justified outliers without being subjective? Is this something that we plan to do every Pyweek, and if not, why would we be doing it now?

1/1/2 with the comment "Yeah i get the concept but the implementation is blahhhh" (mentioned above) and

1/1/1 with the comment "Please update your code to Python 3.X for the betterment of us all"

Both of those scores and comments were REALLY disheartening. I noticed them because all of the other scores tended to be higher and came with good observations, criticisms and ideas for how to make the game better. I appreciate the critical comments because they make me better. These two just stuck in my head because they seemed unfair and almost arbitrary. I didn't think using Python 2.7 would disqualify me or cause me to have a lower score. Maybe I was wrong and I don't understand the rules. I was left frustrated and confused.

I don't want to take away anyone's win, and removing the scores would affect my standing. Maybe people could explain the low ranking a little better? For the future, maybe some statistical analysis could be done to eliminate the really odd scores or to check whether there are very biased judges. Maybe require people to type more than a brief sentence to give someone a really high score or a really low score. That would help us get better. Again, I don't want to disappoint someone who has already won, and if I deserved the low score then that's ok. As a community, I appreciate people talking and helping me understand this better.

All in all, Pyweek has been one of the best weeks of my life. Thank you for continuing it and helping all of us get better. I'll be back next time.

OrionDark7: Thanks for the input! Those comments indeed seem unfair and you are completely right that using Python 2.7 should not have affected your scores at all. I think by removing anonymity we'll take a step forward in improving the feedback quality. We could reevaluate the way the scoring system works as well. I'm glad that you'll be back next time. :)

I wonder if some upvote/downvote functionality on the feedback could help guide people into more constructive comments. It is not about making feedback bland but about making it easier to digest and more usable for the developer.

For me, the analysis of the voting / feedback has been a great way to explore the topic but I'd be wary of removing ratings and comments. I would say the line would be crossed only when someone was intentionally voting to "game" the system in their favour (e.g. voting a clearly strong entry very low to try to improve your own game's chances, or entering multiple (bogus) entries and using those entries to vote your own game higher).

There was at least one time in the past when I tried a game and couldn't get past the first (very simple) level. I thought the game was not very good. Later I saw a play-through and realised I completely missed some great content and my rating was definitely way too low.

On the one hand my low ranking was not valid as I missed most of the content. On the other hand one of the design challenges of Pyweek is accounting for the fact that judges have a lot of games to play and limited time, so steep learning curves are risky!

We can still have production, fun, and innovation, but instead you can downvote or upvote each aspect, then at the end add the scores together.

Sadly the issue is that I am terrible at web development, so I can really only contribute ideas.

I agree with Tee. Retconning the scores is a drastic measure that should be invoked in the extreme of cheating, or a (cough) bug in the competition calculator but there are no such things. :)

Since I was the one who openly complained, I would like to withdraw the complaint. I'm glad you determined it was not a case of sabotage. I would have hoped not to have such a blight on my entry, but them's the breaks. We're all human, no one is perfect. And in general life is fair, except when it's not. I can live with that. We err when we wax draconian in an endeavor to achieve a utopia.

I like the idea of displaying the Pyweek nickname next to the comment. This detail will help us assess whether we think a behavior needs the attention of the overseers. In this case, had I been able to see the names, I would not have bothered to raise an issue. In other words, anonymity contributed to my seeing a ghost.

Thank you for investigating! You were much more scientific than I could have been. =)

Folks, please take a look at my new post "Gumm's recipes for success!" where I lay down some personal tips for game makers and judges. I hope we all will have some of these in mind as ways to improve our games, and our scores, by improving the judging experience.

I read all of the text and I agree with an idea from IvanTHoffmann on a previous thread, which was adding text fields for every aspect of the rating section. I believe that it would be a nice add-on and would decrease the amount of unconstructive feedback. But I think we should still keep the general comment section to retain the freedom from before. Based on my current programming experience, I think it should not be too hard to do, but that is just what I think. If nobody has the time to add this, I could make this my next project and try to add 3 more fields, which are Fun, Production, and Innovation, to the rating page.

Thank You mauve for spending your time on this! :)

mit-mit on 2018/05/09 12:55:

Hey Mauve: I agree with the proposal to remove exceptionally low ratings with no justification, particularly the one Tee received, as this looks more like it was done by accident (i.e. it's totally inconsistent with the actual game, which was probably the easiest to get running, with minimal dependencies). I think you should re-post the scores after this (if everyone else agrees), and I am more than happy to hand the title over to Tee: if Tee's rating is the highest, he should be declared the individual competition winner alone. Personally I think the overall ratings you get for your game are the more important thing.

I would also officially second the proposal for non-anonymous ratings for Pyweek 26 onwards: hopefully with this in place, we won't have too many outliers in future ratings.